Decompiling Duden dictionaries

Duden is the largest producer of digital German dictionaries. Unfortunately, they are distributed in an obscure proprietary format, meant to be used with the Duden-Bibliothek software.

My goal was to reverse engineer the format and write a converter to the text-based DSL format, which is supported by both GoldenDict and ABBYY Lingvo (through the official compiler).

In this post I describe the interesting parts of the Duden format and the problems I encountered while translating it into DSL.

Overview

Generally speaking, a dictionary consists of headings and articles. Each article might reference other headings, pictures, tables and sound files.

There are two primary steps in converting a Duden dictionary:

- Decompile the binary files and get your hands on Duden markup in which the articles are written.

- Translate Duden markup into DSL.

The first step was mostly deterministic and involved reverse engineering the data structures, compression and the sound format.

The second step required some creativity in finding the best way of approximating Duden markup features with DSL markup, which is rather limited in comparison.

Consider this example article. It’s written in the Duden markup language:

@C%ID=785866893

@1An|ti|mo|n\u{i}t \S{;.Ispeaker.bmp;T;ID4424454_498520938.adp} @0[auch: …'nɪt \S{;.Ispeaker.bmp;T;ID4424453_386912901.adp}] @2der; @0-[e]s: \\

@8\\

@9

(meist als »Antimonglanz« od. »Grauspießglanz« bezeichnetes wichtigstes) Antimonerz

Notably, it references two sound files and uses text formatting. Here is one way of translating this article into DSL:

[b]An|ti|mo|n[u]i[/u]t [s]ID4424454_498520938.wav[/s] [/b]\[auch: …'nɪt [s]ID4424453_386912901.wav[/s]\] [i]der; [/i]-\[e\]s:

(meist als »Antimonglanz« od. »Grauspießglanz« bezeichnetes wichtigstes) Antimonerz
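The translation of the heading line above can be sketched in a few lines of Python. This is only an illustration, not the converter's actual code: the tag meanings (`@1` bold, `@2` italic, `@3` bold italic, `@0` reset) are inferred from the examples in this post, and the names `to_dsl`, `STYLES` and `TOKEN` are made up for the sketch.

```python
import os
import re

# Style codes inferred from the examples in this post (an assumption);
# any other digit, including @0, just closes whatever is open.
STYLES = {"1": ["b"], "2": ["i"], "3": ["b", "i"]}

TOKEN = re.compile(
    r"@(?P<style>\d)"               # style switch, e.g. @1
    r"|\\u\{(?P<under>[^}]*)\}"     # underlined text
    r"|\\S\{(?P<sound>[^}]*)\}"     # sound reference
    r"|(?P<br>\\\\)"                # line break marker
    r"|(?P<bracket>[\[\]])"         # brackets must be escaped in DSL
)

def to_dsl(line):
    out, open_tags, pos = [], [], 0

    def close_all():
        while open_tags:
            out.append(f"[/{open_tags.pop()}]")

    for m in TOKEN.finditer(line):
        out.append(line[pos:m.start()])   # plain text before the token
        pos = m.end()
        if m.group("style") is not None:
            close_all()
            for tag in STYLES.get(m.group("style"), []):
                out.append(f"[{tag}]")
                open_tags.append(tag)
        elif m.group("under") is not None:
            out.append(f"[u]{m.group('under')}[/u]")
        elif m.group("sound") is not None:
            # the last ';'-separated field is the file name
            name = m.group("sound").split(";")[-1]
            out.append(f"[s]{os.path.splitext(name)[0]}.wav[/s]")
        elif m.group("bracket"):
            out.append("\\" + m.group("bracket"))
        # the line break marker is simply dropped here
    out.append(line[pos:])
    close_all()
    return "".join(out).rstrip()
```

Feeding it the `@1An|ti|mo|n...` line from the example yields the first line of the DSL article shown above.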

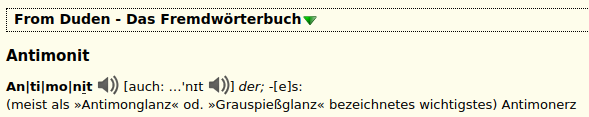

Rendered by GoldenDict it looks like this:

Here is a data flow diagram showing how a Duden dictionary is converted into a DSL dictionary.

I won’t go into details of each step, but instead describe the parts that I found most interesting.

Modified DEFLATE

All text and many resources in a Duden dictionary are stored in archives. Each archive is a pair of IDX and BOF files.

A BOF file consists of many small blocks, each compressed independently. Compressed blocks vary in length, so the IDX file stores the offset of each block within the BOF file. Every block decompresses to exactly 0x2000 bytes (8 KiB), except possibly the last one.

The blocks are compressed with DEFLATE, a well-known algorithm used most notably in PNG images and ZIP archives.
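Fixed-size decompressed blocks make random access cheap: a position in the decompressed data maps directly to a block index. Here is a minimal Python sketch of that idea. It assumes the block offsets have already been parsed out of the IDX file into a list (the IDX layout itself is not covered here) and that each block is a raw DEFLATE stream; the function names are hypothetical. Stock zlib is used for illustration only, since it cannot handle the modified blocks described below.

```python
import zlib

BLOCK_SIZE = 0x2000  # decompressed size of every block but the last

def inflate_block(bof, offsets, index):
    """Decompress block `index`; it ends where the next block starts."""
    start = offsets[index]
    end = offsets[index + 1] if index + 1 < len(offsets) else len(bof)
    return zlib.decompress(bof[start:end], wbits=-15)  # -15: raw DEFLATE

def read_blocks(bof, offsets):
    """Yield all decompressed blocks in order."""
    for index in range(len(offsets)):
        yield inflate_block(bof, offsets, index)

def read_at(bof, offsets, pos, size):
    """Read `size` bytes starting at decompressed position `pos`."""
    out = bytearray()
    while size > 0:
        index, skip = divmod(pos, BLOCK_SIZE)
        if index >= len(offsets):
            break  # past the end of the archive
        chunk = inflate_block(bof, offsets, index)[skip:skip + size]
        if not chunk:
            break
        out += chunk
        pos += len(chunk)
        size -= len(chunk)
    return bytes(out)
```

Only the blocks that overlap the requested range are decompressed, which is presumably why the format uses small blocks in the first place.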

DEFLATE itself splits the uncompressed data into blocks and compresses each DEFLATE block independently using one of the following modes:

- No compression. Used when the other modes would make the data larger; very rare in practice.

- Compression using dynamic Huffman trees, which are constructed from the data in the block. The resulting tree is stored in the block together with the compressed data. This is the primary mode of DEFLATE.

- Compression using static Huffman trees (RFC 1951 calls them fixed Huffman codes), which are built into the DEFLATE compressor itself and are independent of the data. This mode makes sense when the developer of the compressor knows beforehand what kind of data will be compressed and can construct a Huffman tree for that data. The savings come from omitting the Huffman tree from the block.
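To see which of the three modes a block uses, it is enough to look at its first three bits: one "final block" flag followed by a two-bit type. The Python sketch below reads the type of the first block in a raw DEFLATE stream and uses standard zlib strategies to produce one block of each kind. The helper names are made up for this example.

```python
import zlib

BLOCK_TYPES = {0: "stored", 1: "fixed Huffman", 2: "dynamic Huffman", 3: "reserved"}

def first_block_type(raw):
    """Return the type of the first block of a raw DEFLATE stream."""
    # DEFLATE packs bits starting from the least significant one:
    # bit 0 is BFINAL, bits 1-2 are BTYPE.
    return BLOCK_TYPES[(raw[0] >> 1) & 0b11]

def raw_deflate(data, level=9, strategy=zlib.Z_DEFAULT_STRATEGY):
    """Compress `data` into a raw DEFLATE stream (no zlib header)."""
    c = zlib.compressobj(level=level, wbits=-15, strategy=strategy)
    return c.compress(data) + c.flush()

# Level 0 disables compression, so zlib emits stored blocks.
stored = raw_deflate(b"abc" * 100, level=0)
# Z_FIXED forbids dynamic trees, forcing the RFC 1951 fixed codes.
fixed = raw_deflate(b"abc" * 100, strategy=zlib.Z_FIXED)
# Two-symbol data without LZ77 matching strongly favours a dynamic tree.
dynamic = raw_deflate(b"ab" * 5000, strategy=zlib.Z_HUFFMAN_ONLY)

for stream in (stored, fixed, dynamic):
    print(first_block_type(stream))
```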

When I first discovered the use of DEFLATE, I decompressed the blocks using the zlib library and thought I was done. But I soon found that some BOF blocks were apparently corrupted: zlib reported errors. I looked closer and the problem turned out to be with the third type of DEFLATE blocks.

Zlib, like the majority of other available DEFLATE decompressors, implements RFC 1951, which specifies exactly which static Huffman trees are to be used.

Duden decided to generate its own Huffman tree for static DEFLATE blocks. That meant I had to extract the tree and modify a DEFLATE decompressor to use it.
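For context, a static tree in DEFLATE is fully described by a list of code lengths, one per symbol; the actual bit patterns follow from the canonical assignment in RFC 1951, section 3.2.2. The Python sketch below builds the codes from a list of lengths and applies it to the standard literal/length table. A decoder patched for Duden would plug in a different list of lengths; those values are not reproduced here, and `canonical_codes` is just a name chosen for the example.

```python
def canonical_codes(lengths):
    """Map symbol -> (code, bit length) as in RFC 1951, section 3.2.2."""
    max_len = max(lengths)
    # Count how many symbols use each code length (0 means "unused").
    bl_count = [0] * (max_len + 1)
    for length in lengths:
        if length:
            bl_count[length] += 1
    # Compute the smallest code for each length.
    next_code = [0] * (max_len + 1)
    code = 0
    for bits in range(1, max_len + 1):
        code = (code + bl_count[bits - 1]) << 1
        next_code[bits] = code
    # Hand out consecutive codes to symbols of the same length.
    codes = {}
    for symbol, length in enumerate(lengths):
        if length:
            codes[symbol] = (next_code[length], length)
            next_code[length] += 1
    return codes

# Literal/length code lengths of the standard fixed tree (RFC 1951, 3.2.6).
RFC1951_FIXED_LENGTHS = [8] * 144 + [9] * 112 + [7] * 24 + [8] * 8

fixed_codes = canonical_codes(RFC1951_FIXED_LENGTHS)
code, length = fixed_codes[256]  # end-of-block symbol
print(format(code, f"0{length}b"))
```

Because only the lengths matter, swapping in a custom static tree comes down to replacing one table in the decompressor.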

I find this customization of DEFLATE to be a classic example of overengineering. Not only are static DEFLATE blocks very rarely used in Duden data, but the same Huffman tree is used for both text and binary resources, which have little in common. This might have been different in the past, though.

Text encoding

Today Unicode rules the world and it’s becoming increasingly difficult to find any other encoding used in the wild. So it was surprising that Duden didn’t use Unicode. More surprising still was that it didn’t use any encoding I could recognize.

The Duden encoding is a variable-length encoding that mixes Windows-1252, Unicode code points and special cases for certain symbols.

Another complication is that Duden markup and the use of this encoding are intermixed. Text inside certain tags is encoded in fixed 8-bit Windows-1252, which is incompatible with the Duden encoding used outside such tags.

I don’t see much point in explaining the encoding in detail. It’s most likely a historical curiosity, developed at a time when Unicode wasn’t widespread and Duden dictionaries were limited to German characters.

See the dudenToUtf8 function in the lsd2dsl repository for the exact mapping to Unicode.

Tables

Duden markup has advanced support for tables. Among the features are:

- merged cells

- cell alignment

- text formatting

- images

- nested tables inside cells

These features need to be emulated in DSL, as it doesn’t have any support for tables. I could either convert Duden tables into plain HTML and rely on the dictionary viewer to render them through a non-standard DSL extension, or prerender all the tables and include them as bitmap images. I chose the second option.

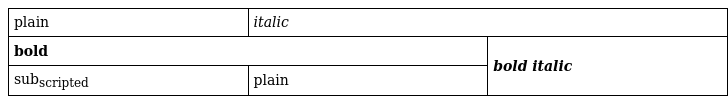

Take this table as an example:

\tab{\tcn3\tln3\tau

\tccplain

\tcc@2italic

\tcc\ter

\tcc@1bold

\tcc\ter

\tcc@3bold italic

\tccsub\sub{scripted}

\tccplain

\tcc\ted

\tcc}

The table contains merged cells and text formatting. This is an HTML translation:

<table>

<tr>

<td style="width: 33%;" colspan="1" rowspan="1">plain</td>

<td style="width: 66%;" colspan="2" rowspan="1">

<i>italic</i>

</td>

</tr>

<tr>

<td style="width: 66%;" colspan="2" rowspan="1">

<b>bold</b>

</td>

<td style="width: 33%;" colspan="1" rowspan="2">

<b><i>bold italic</i></b>

</td>

</tr>

<tr>

<td style="width: 33%;" colspan="1" rowspan="1">sub

<sub>scripted</sub>

</td>

<td style="width: 33%;" colspan="1" rowspan="1">plain</td>

</tr>

</table>

With a simple style sheet, it can be rendered like this:

The fact that tables contain arbitrary text formatting meant that my converter needed to convert a good chunk of Duden markup to both DSL and HTML.
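Once the Duden table has been parsed into cells with their spans, emitting HTML like the listing above is mechanical. The Python sketch below shows that last step only. The `Cell` model and `table_to_html` are hypothetical names for this illustration, and the cell contents are assumed to be already translated to inline HTML.

```python
from dataclasses import dataclass

@dataclass
class Cell:
    html: str          # already-translated content, e.g. "<i>italic</i>"
    colspan: int = 1
    rowspan: int = 1

def table_to_html(rows, columns):
    """Render rows of cells; `columns` is the logical column count."""
    parts = ["<table>"]
    for row in rows:
        parts.append("<tr>")
        for cell in row:
            # Width is proportional to the number of columns spanned.
            width = 100 * cell.colspan // columns
            parts.append(
                f'<td style="width: {width}%;" colspan="{cell.colspan}" '
                f'rowspan="{cell.rowspan}">{cell.html}</td>'
            )
        parts.append("</tr>")
    parts.append("</table>")
    return "\n".join(parts)

# The example table from this section, expressed in the hypothetical model.
rows = [
    [Cell("plain"), Cell("<i>italic</i>", colspan=2)],
    [Cell("<b>bold</b>", colspan=2), Cell("<b><i>bold italic</i></b>", rowspan=2)],
    [Cell("sub<sub>scripted</sub>"), Cell("plain")],
]
print(table_to_html(rows, columns=3))
```

The resulting HTML can then be handed to any HTML renderer to produce the bitmap.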

Broken dictionaries

For some reason Duden dictionaries sometimes contain bad markup. There are syntax errors, such as a tag without the closing brace, or a web reference (a URL plus description) that contains characters that are not allowed inside a reference. These types of errors are not handled by Duden-Bibliothek either. I don’t know how Duden dictionaries are originally authored, but I presume the Duden editor doesn’t do proper validation before compiling a dictionary.

Simply discarding badly formatted articles would be inappropriate given their number. Instead, my parser is lenient about bad formatting and tries to recover from certain types of errors. The decision to tolerate formatting errors meant that the easiest way to write the parser was by hand, without using a parser generator. In a hand-written parser it’s usually easier to insert ad hoc error handling.

Another curious problem is a dictionary whose whole structure is broken. For example, the headings of a dictionary are stored in a search tree structure, and I’ve seen a dictionary where the tree wasn’t actually sorted and contained wrong offsets into the article archive. Duden-Bibliothek failed to parse that dictionary and I couldn’t find a way to restore it.
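As an illustration of this kind of recovery, here is a minimal hand-written parser in Python for tags of the form `\name{...}`. It handles one error class only: a tag whose closing brace is missing is closed implicitly at the end of the input instead of failing. The grammar is heavily simplified and the names are invented for the sketch, so it should not be read as the real parser.

```python
import re

TAG_OPEN = re.compile(r"\\([A-Za-z]+)\{")

def parse(text):
    """Parse into a list of strings and (tag name, children) tuples."""
    pos = 0

    def parse_nodes(inside_tag):
        nonlocal pos
        nodes, buf = [], []

        def flush():
            if buf:
                nodes.append("".join(buf))
                buf.clear()

        while pos < len(text):
            ch = text[pos]
            if ch == "}" and inside_tag:
                pos += 1
                flush()
                return nodes
            if ch == "\\":
                m = TAG_OPEN.match(text, pos)
                if m:
                    flush()
                    pos = m.end()
                    nodes.append((m.group(1), parse_nodes(True)))
                    continue
            # Anything else, including a stray "}", is kept as text.
            buf.append(ch)
            pos += 1
        # Recovery: end of input closes any tag that is still open.
        flush()
        return nodes

    return parse_nodes(False)

print(parse(r"sub\sub{scripted}"))
print(parse(r"sub\sub{scripted"))  # missing brace, same result
```

With a parser generator, the second input would typically be rejected outright; here the recovery rule is a single fall-through at the end of the loop.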

Sound encoding

Sound files are encoded using Adaptive Differential Pulse Code Modulation (ADPCM). It’s a lossy codec optimized for voice. Thankfully, a decoder is very small and can be easily implemented without an external library.
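To give an idea of how small such a decoder is, here is a Python sketch of the widely used IMA/DVI flavour of ADPCM. Whether Duden uses this exact variant, nibble order and initial state is an assumption made for the sake of illustration; the point is only the overall shape of the algorithm.

```python
# Standard IMA ADPCM tables (89 step sizes, 8 index adjustments).
STEP_TABLE = [
    7, 8, 9, 10, 11, 12, 13, 14, 16, 17, 19, 21, 23, 25, 28, 31,
    34, 37, 41, 45, 50, 55, 60, 66, 73, 80, 88, 97, 107, 118, 130, 143,
    157, 173, 190, 209, 230, 253, 279, 307, 337, 371, 408, 449, 494, 544,
    598, 658, 724, 796, 876, 963, 1060, 1166, 1282, 1411, 1552, 1707,
    1878, 2066, 2272, 2499, 2749, 3024, 3327, 3660, 4026, 4428, 4871,
    5358, 5894, 6484, 7132, 7845, 8630, 9493, 10442, 11487, 12635, 13899,
    15289, 16818, 18500, 20350, 22385, 24623, 27086, 29794, 32767,
]
INDEX_TABLE = [-1, -1, -1, -1, 2, 4, 6, 8]

def decode_ima_adpcm(data, predictor=0, index=0):
    """Decode 4-bit IMA ADPCM into a list of signed 16-bit samples."""
    samples = []
    for byte in data:
        # Assumption: the low nibble holds the earlier sample.
        for nibble in (byte & 0x0F, byte >> 4):
            step = STEP_TABLE[index]
            # Reconstruct the difference from the 3 magnitude bits.
            diff = step >> 3
            if nibble & 1:
                diff += step >> 2
            if nibble & 2:
                diff += step >> 1
            if nibble & 4:
                diff += step
            # Bit 3 is the sign.
            predictor += -diff if nibble & 8 else diff
            predictor = max(-32768, min(32767, predictor))
            # Adapt the step size for the next sample.
            index = max(0, min(88, index + INDEX_TABLE[nibble & 7]))
            samples.append(predictor)
    return samples
```

Each input byte yields two samples, so the decoded 16-bit audio is four times the size of the encoded data.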

I don’t like lossy-to-lossy conversions, so I decode all sound files into lossless WAV. Given that all resources are repackaged into ZIP, the size inflation is at least partially mitigated.
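Wrapping decoded samples into a WAV container needs nothing beyond the Python standard library. The sketch below assumes mono 16-bit samples and takes the sample rate as a parameter, since the rate used by Duden sound files is not stated here; `pcm_to_wav` is a hypothetical helper name.

```python
import io
import struct
import wave

def pcm_to_wav(samples, sample_rate, channels=1):
    """Pack signed 16-bit samples into an in-memory WAV file."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)               # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        # WAV stores samples as little-endian signed integers.
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))
    return buf.getvalue()
```

The returned bytes can be written straight into the ZIP archive that holds the dictionary's resources.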

Conclusion

I added the new Duden decompiler to lsd2dsl, because that way I could reuse some code. I’m not yet comfortable calling the converter complete, but I’m happy with the result. Additional improvements should be easy enough to make on top of the existing architecture.